Automated Scoring

A visionary approach to automated scoring of educational assessments.

- Accuracy

- Consistency

- Agility

- Efficiency

Our automated computer scoring services are backed by research and are unparalleled in the field.

Pearson’s automated scoring solutions

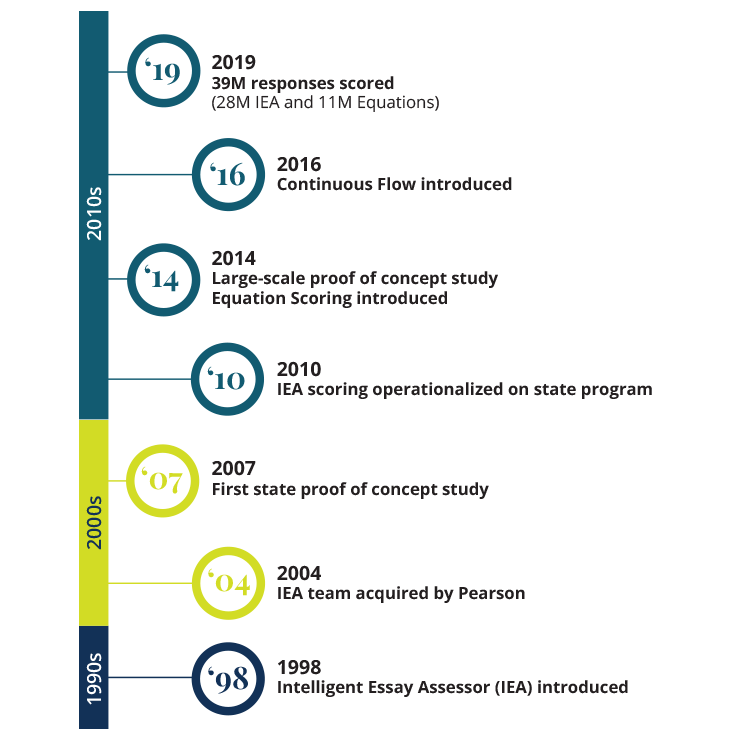

Intelligent Essay Assessor

IEA uses machine learning and natural language processing to score essays and short answers in the same way human scorers do.

Versant

Versant uses patented technology to assess spoken English language proficiency.

Equation Editor

The equation editor enables students to enter proper mathematical notation not supported by traditional keyboards.

Math Reasoning Engine

The Math Reasoning Engine automatically evaluates open-ended mathematical equations and expressions by reasoning about a student’s math.

Scoring innovation: Continuous Flow

Continuous Flow is Pearson’s patented, industry-leading scoring solution that flows responses dynamically between humans and automated scoring to optimize scoring quality, efficiency, and value. Based on rigorous research, Continuous Flow has successfully scored millions of large-scale summative assessments since 2015.

The benefits of Continuous Flow

- Automated scoring is used alongside human scoring.

- Challenging responses instantly route to human scorers.

- The engine learns from humans in real time.

With our Continuous Flow approach to scoring, human scorers begin the scoring process and the Intelligent Essay Assessor (IEA) learns from them.
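The routing idea described above can be illustrated with a minimal sketch. This is not Pearson's implementation: the `HybridScorer` class, the confidence threshold, and the toy engine and human scorer are all hypothetical names and assumptions introduced only to show how low-confidence responses might be handed to a human, with the human's score kept as a new training example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class HybridScorer:
    # Hypothetical engine interface: returns (score, confidence in [0, 1]).
    engine: Callable[[str], Tuple[int, float]]
    # Hypothetical human scorer interface: returns a score.
    human: Callable[[str], int]
    # Assumed cutoff; below it the response is treated as "challenging".
    threshold: float = 0.8
    # Human-scored responses kept so the engine could later be retrained.
    training_examples: List[Tuple[str, int]] = field(default_factory=list)

    def score(self, response: str) -> Tuple[int, str]:
        """Return (score, source), where source is 'engine' or 'human'."""
        engine_score, confidence = self.engine(response)
        if confidence >= self.threshold:
            return engine_score, "engine"
        # Low confidence: route to a human and record the human's score.
        human_score = self.human(response)
        self.training_examples.append((response, human_score))
        return human_score, "human"


def toy_engine(response: str) -> Tuple[int, float]:
    # Stand-in model: confident only when the response has 3+ words.
    words = len(response.split())
    return min(words, 4), (0.9 if words >= 3 else 0.4)


def toy_human(response: str) -> int:
    # Stand-in human scorer that always assigns a score of 2.
    return 2


scorer = HybridScorer(engine=toy_engine, human=toy_human)
print(scorer.score("a clear and complete answer"))
print(scorer.score("unsure"))
```

In this sketch the first response is scored by the toy engine and the second is routed to the toy human, whose score is appended to `training_examples`. A real system would use those examples to update the model; that step is omitted here.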

Selected research and white papers

Hauger, J., Lochbaum, K.E., Quesen, S., & Zurkowski, J. (2018, June). Faster and Better: The Continuous Flow Approach to Scoring. Presented at the National Conference on Student Assessment (NCSA), San Diego, CA.

https://ccsso.confex.com/ccsso/NCSA2018/mediafile/Presentation/Session5593/CCSSO_faster_better_FULL_06272018.pdf

Flanagan, K., Lochbaum, K.E., Walker, M., Way, D., & Zurkowski, J. (2016, June). Continuous Flow Scoring of Prose Constructed Response: A Hybrid of Automated and Human Scoring. Presented at the National Conference on Student Assessment (NCSA), Philadelphia, PA. https://ccsso.confex.com/ccsso/2016/webprogram/Session4615.html

Foltz, P. W., Streeter, L. A., Lochbaum, K. E., & Landauer, T. K. (2013). Implementation and applications of the Intelligent Essay Assessor. In Handbook of Automated Essay Evaluation, M. D. Shermis & J. Burstein (Eds.), pp. 68-88. Routledge: New York.

Foltz, P. W. (2007). Discourse coherence and LSA. In T. K. Landauer, D. McNamara, S. Dennis, & W. Kintsch (Eds.), LSA: A road to meaning. Mahwah, NJ: Lawrence Erlbaum Publishing.

Landauer, T. K., Laham, R. D. & Foltz, P. W. (2003a). Automated Scoring and Annotation of Essays with the Intelligent Essay Assessor. In M. Shermis & J. Burstein (Eds.), Automated Essay Scoring: A cross-disciplinary perspective. Mahwah, NJ: Lawrence Erlbaum Publishers.

Landauer, T. K., Laham, R. D. & Foltz, P. W. (2003b). Automated Essay Assessment. Assessment in Education, 10(3), 295-308.

Landauer, T. K., Laham, D. & Foltz, P. W. (2001). Automated essay scoring. IEEE Intelligent Systems. September/October.

Landauer, T. K., Foltz, P. W. & Laham, D. (1998). An introduction to Latent Semantic Analysis. Discourse Processes, 25(2&3), 259-284.